From Photoshop to Prompts: AI and the Ethics of Scientific Fraud

The temptation to manipulate data in scientific research is nothing new. Mildred Cho, David Magnus, and Glenn McGee explored this in Science during the high-profile case of Hwang Woo-suk, whose fraudulent claims about cloning human embryos made global headlines in the mid-2000s. That scandal was a turning point, not just for stem cell science but for the bioethics community as well. It showed that even in elite labs, scientific integrity could collapse under ambition, nationalism, or pressure to produce world-changing results.

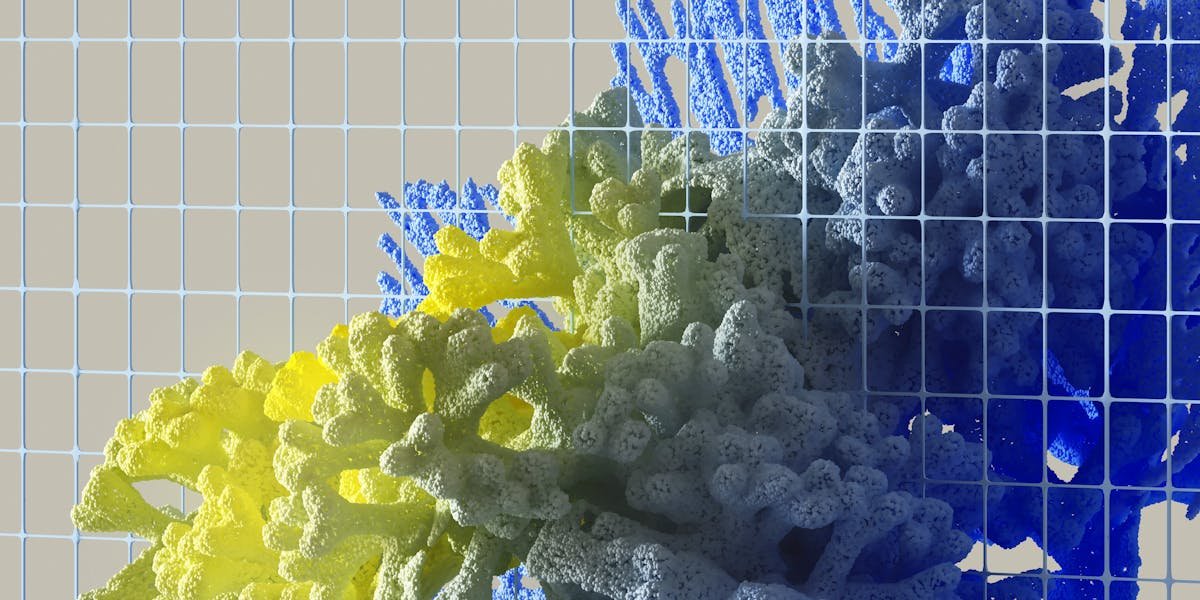

Fast forward to today, and the tools of deception have evolved. In 2024, the journal Science published a commentary warning that generative AI tools like ChatGPT and image generators could be used to fabricate entire studies—complete with fake citations, data tables, and even synthetic microscopy images. This development doesn’t just make cheating easier; it makes it harder to detect. With the right prompt, a researcher could generate convincing but entirely fictional evidence that slides past peer review and onto the public record. (source)

This new era of AI-generated fraud raises old questions with new urgency. How should journals vet submissions? Should labs be required to disclose use of generative AI tools? What kind of transparency—algorithmic or ethical—must accompany this new capability?

Ethics in science must now expand to include both human intent and machine capability. The core issue remains unchanged: research must serve truth, not ambition. But the safeguards we build must now anticipate tools that can lie more fluently—and convincingly—than ever before.

📚 Read the Full Publication

A foundational article from Glenn’s work on scientific fraud:

Lessons of the Stem Cell Scandal – Glenn McGee